On Google Analytics

On the web, the "traditional" method of visitor tracking is at the origin web server. This is perfectly reliable and trustworthy: it is impossible to visit a site without contacting the origin server[1], and no other parties need be involved. Tools such as webalizer perform logfile analysis and produce visitor statistics.[2]
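As a rough illustration of what server-side logfile analysis involves, here is a minimal sketch in Python. It assumes the server writes Common Log Format and counts one "visitor" per distinct client address per day, which is a simplification. The function name and the sample lines are made up for illustration, and this is not a description of how webalizer itself works.

```python
import re
from collections import defaultdict

# Common Log Format: host ident authuser [date] "request" status bytes
CLF_LINE = re.compile(
    r'^(?P<host>\S+) \S+ \S+ \[(?P<day>[^:]+):[^\]]+\] '
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) (?P<size>\S+)'
)

def visitors_per_day(lines):
    """Return {day: number of distinct client hosts seen that day}."""
    hosts_by_day = defaultdict(set)
    for line in lines:
        match = CLF_LINE.match(line)
        if match is None:
            continue  # skip lines that are not valid CLF
        hosts_by_day[match.group("day")].add(match.group("host"))
    return {day: len(hosts) for day, hosts in hosts_by_day.items()}

# Hypothetical sample data using documentation-only IP ranges.
SAMPLE_LOG = [
    '192.0.2.1 - - [10/Oct/2014:13:55:36 +0000] "GET / HTTP/1.1" 200 2326',
    '192.0.2.1 - - [10/Oct/2014:13:55:40 +0000] "GET /about HTTP/1.1" 200 512',
    '198.51.100.7 - - [10/Oct/2014:14:02:11 +0000] "GET / HTTP/1.1" 200 2326',
    '192.0.2.1 - - [11/Oct/2014:09:00:00 +0000] "GET / HTTP/1.1" 304 -',
]

if __name__ == "__main__":
    for day, count in visitors_per_day(SAMPLE_LOG).items():
        print(day, count)
```

Everything this needs is already in the server's own log, and no third party is contacted at any point.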

However, in the past decade a new model of visitor tracking has achieved prominence: third-party tracking via javascript, often with a fallback to transparent 1x1 GIFs ("web bugs"). This model is exemplified by the most widespread tracking tool, Google Analytics.

Google Analytics (GA) is overwhelmingly popular. At the time of writing, approximately half of the web uses GA.[3] There is no user benefit to participating in GA tracking, but there are costs both in resource usage and in information leakage. As a consequence, google-analytics.com is also one of the most blacklisted domains on the web.

How blacklisted? Here is a sampling of popular ad-blocking and other privacy-centric browser addons, along with their approximate userbase.

- AdBlock: over 40 million users (Chrome).

- Adblock Plus: 20,320,308 users (Firefox).

- Adblock Edge: 731,135 users (Firefox).

- NoScript: 2,171,006 users (Firefox), plus every installation of Tor Browser (estimated 2 million daily users).

- Ghostery: 1,278,423 users (Firefox), 1,431,362 users (Chrome).

All of the above tools block GA by default. This is not counting other, more niche tools, or the individuals and organisations who block GA manually in their browsers or at their firewalls.

This is not a negligible number of people. Quite apart from the fact that an awful lot of people seem not to want GA to be a part of their browsing experience, these are all people who will be invisible to GA, and absent from the data it provides. That it is possible (and even desirable) to opt out of third-party tracking undermines the entire concept of third-party tracking.

That Google offers such a deeply flawed service is easy to understand. The question for Google is not whether the data it collects from GA is complete, but whether it gets to collect it at all. Google is the biggest infovore in history: of course websites outsourcing their tracking to Google is a win for Google.

And yet, in part because it is easy to use, GA is incredibly widespread. Given its popularity, GA would perhaps seem to be a win for site operators too. I think not, and here's why: deploying GA sends a message. If a site uses GA, it seems reasonable to conclude the following about the site operator:

- You care about visitor statistics, but not enough to ensure that they are actually accurate (by doing them yourself).[4] Perhaps you don't understand the technology involved, or perhaps you value convenience more than correctness. Neither bodes well.

- Furthermore, you're willing to violate visitors' privacy by instructing their browsers to inform Google every time they visit your site. Since in return you get only some questionable statistics, it seems visitors' privacy is not important to you.

If you run a website, this is probably not the message you want to be sending.

[1] The increasing use of CDNs to serve high-bandwidth assets such as images and videos does not change the fact that 100% of visits to a modern, dynamic site pass through the origin server(s). Frontend caches such as Varnish merely change the location of logs, not their contents or veracity.

[2] There are very probably more modern server-side tools available nowadays; I've been out of this game for a while now. Certainly CLF leaves a lot to be desired. But since web servers have all the data available to them this is strictly a quality-of-implementation issue.

[3] http://w3techs.com/technologies/overview/traffic_analysis/all claims 49.9%. http://trends.builtwith.com/analytics/Google-Analytics confirms this number, and offers a breakdown by site popularity for the top 10,000, 100,000 and 1,000,000 sites. The proportion using GA rises with popularity, from 45% of the top million to nearly 60% of the top ten thousand.

[4] This is an assumption. Deploying GA does not preclude the option of tracking visitors at your web server. But since web server tracking is strictly better than GA, why would anyone bother to use both?

SSL (mis)adventures

So I've been meaning to set up SSL on here for a while now—the web being unencrypted by default these days is just silly—and reading this gave me the impetus to give it a try. ($0, you say? Under an hour, you say? Sounds good to me!) My experiences were... frustrating.

Step 1: Register with StartSSL. After I grudgingly gave them all my personal information, I was provided with a client certificate, which my browser (Chromium) promptly rejected. "The server returned an invalid client certificate. Error 502 (net::ERR_NO_PRIVATE_KEY_FOR_CERT)". The end.

Since the auth token they emailed me only worked once, I couldn't try using another browser. So, unsure what to do (and thinking they might appreciate knowing about problems people have using their site, so they can fix them or work around them or even just document them), I fired off an email.

The response I got was less than helpful: "I suggest to simply register again with a Firefox. Make sure that there are no extensions in Firefox that might interfere with the client certificate generation." Gee thanks, I would never have thought of that. And nope, I can't register in Firefox, my email address already has an account associated with it. Perhaps naïvely, I thought StartSSL might frown on people creating multiple accounts (or might like to take the opportunity to purge accounts that will never be used because their owners can't access them), which was why I didn't just create a second account using a different address in the first place. Still, lesson learned, second account created, no problems this time round. Bug fixed for the next person to come along? Not so much.

Step 2: Validate my domain. Going into this I was thinking "Hmm, will I need to set up a mail server and MX record so I can prove I can receive mail at my domain? Will the email address WHOIS has suffice? What address does WHOIS have, anyway?"

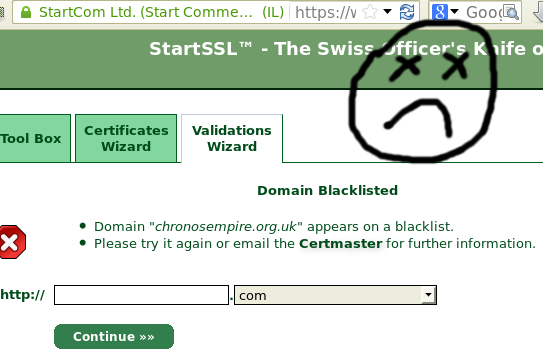

This was premature. Apparently the domain chronosempire.org.uk is blacklisted. Sadness. Not having any clue why, I fired off another email. Turns out it's Google. Google blacklisted me, claiming

"Part of this site was listed for suspicious activity 9 time(s) over the past 90 days."

Nine times? WTF, Google?

The reply continued: "Unfortunately we can't take the risks if such a listing appears in the Class 1 level which is mostly automated. We could however validate your domain manually in the Class 2 level if you wish to do so." I am confused as to what risks there are to StartSSL (I thought they were only verifying my ownership of the domain, which I'm pretty sure is not in doubt), and how those risks would go away if I paid them more than $0 for a Class 2 cert.[1]

Still, StartSSL is just the messenger here. Google recommends I use Webmaster Tools to find out more, so I dig out my rarely-used Google account, get given an HTML file to put in my wwwroot, let Google verify I've done so, and finally I find out what this is about.

I have a copy of Kazaa Lite in my (publicly-indexed) tmp directory. Apparently some time around June 2004 I needed to send it to someone, and it's been there ever since.[2] This should not come as any surprise to anyone who knows of my involvement in giFT-FastTrack, but more to the point, Kazaa Lite is not malware. Not only is it not malware, it not being malware is the entire reason for Kazaa Lite's existence.

Sadly, whether it is or is not malware is irrelevant. "Google has detected harmful code on your site and will display a warning to users when they attempt to visit your pages from Google search results." Nice. So now I have to refrain from putting random executables in my tmp dir in case they make Google hate me? (Total hits for the file in question over the past few months: 14. Hits that weren't Googlebot: zero. In fact, I'm pretty sure not a single actual human has fetched it in the past, say, five years.)
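For the curious, hit counts like these can be pulled from an access log with a few lines of Python. This is only a sketch: it assumes the server writes Combined Log Format (which records the user agent), it identifies Googlebot purely by the user-agent string (which anyone can spoof), and the path `/tmp/example.exe`, the function name and the sample lines are all hypothetical.

```python
import re

# Combined Log Format adds "referer" and "user-agent" after the CLF fields.
COMBINED_LINE = re.compile(
    r'^\S+ \S+ \S+ \[[^\]]+\] "(?P<method>\S+) (?P<path>\S+)[^"]*" '
    r'\d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

def hits_for_path(lines, path):
    """Return (total hits, hits whose user agent does not mention Googlebot)."""
    total = 0
    non_googlebot = 0
    for line in lines:
        match = COMBINED_LINE.match(line)
        if match is None or match.group("path") != path:
            continue  # unparseable line, or a request for something else
        total += 1
        if "googlebot" not in match.group("agent").lower():
            non_googlebot += 1
    return total, non_googlebot

# Hypothetical sample data; addresses are from documentation-only ranges.
SAMPLE_LOG = [
    '203.0.113.5 - - [01/Mar/2014:10:00:00 +0000] "GET /tmp/example.exe HTTP/1.1" '
    '200 1024 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.5 - - [02/Mar/2014:10:00:00 +0000] "GET /tmp/example.exe HTTP/1.1" '
    '200 1024 "-" "Googlebot/2.1 (+http://www.google.com/bot.html)"',
    '192.0.2.9 - - [02/Mar/2014:11:00:00 +0000] "GET /index.html HTTP/1.1" '
    '200 300 "-" "Mozilla/5.0"',
]

if __name__ == "__main__":
    total, humans = hits_for_path(SAMPLE_LOG, "/tmp/example.exe")
    print("total hits:", total, "non-Googlebot hits:", humans)
```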

Anyway. A quick dose of pragmatism and chmod later and my site is squeaky-clean! Now I guess I have to wait 90 days for Google to concur. Which is perhaps just as well, as I've already spent substantially more than an hour on this, I've not even started configuring my web server or making a CSR, and my enthusiasm is as low as the number of people desperate for my copy of Kazaa Lite.

[1] Maybe I'm being overly cynical here and they would actually use the money to check... something? What? I have no idea.

[2] I firmly believe in not breaking URLs unnecessarily. That's my story and I'm sticking to it. It has nothing whatsoever to do with me never cleaning up my filesystem.